Building Production-Grade ML Systems: A Technical Deep Dive

From feature stores to model monitoring, here's the complete technical architecture for scaling ML from prototype to production.

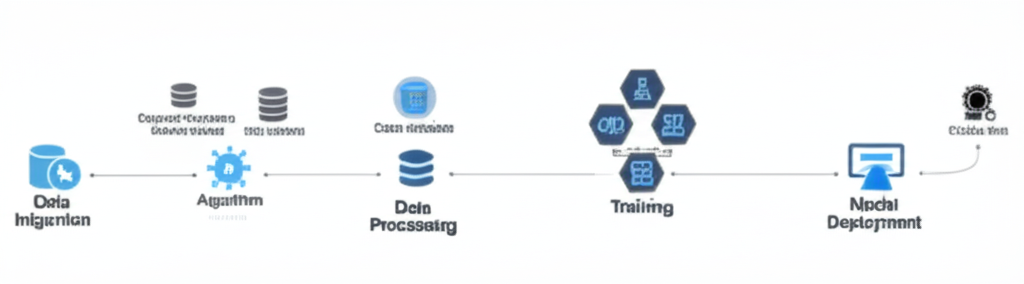

The Production ML Stack

Building a machine learning model is the easy part. The hard part is architecting the infrastructure to train, deploy, monitor, and iterate on that model at scale—reliably, securely, and cost-effectively.

Here's the complete technical architecture we implement for clients.

Layer 1: Data Infrastructure

Data Lake & Warehouse

- Storage: S3/GCS for raw data (Parquet or Delta Lake format for efficient queries)

- Warehouse: Snowflake, BigQuery, or Redshift for structured analytics

- Streaming: Kafka/Kinesis for real-time data ingestion

Data Versioning

Models trained on different data versions must be reproducible. We use:

- DVC (Data Version Control) for dataset versioning

- Delta Lake/Iceberg for time-travel queries

- Metadata catalogs (DataHub, Amundsen) for lineage tracking
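DVC identifies data by content hash rather than by file name, so "which data did this model see?" becomes a string comparison. The sketch below illustrates that idea with the standard library only. `dataset_fingerprint` is a hypothetical helper, not a DVC API; in a real setup DVC computes and stores these hashes for you.

```python
import hashlib
from pathlib import Path


def dataset_fingerprint(root: str) -> str:
    """Return a SHA-256 digest over every file under `root`.

    Files are visited in sorted relative-path order so the digest does not
    depend on directory listing order. Each file contributes its relative
    path, its byte length, and its contents, so edits, additions, deletions,
    and renames all change the fingerprint.
    """
    base = Path(root)
    files = sorted(
        (p.relative_to(base).as_posix(), p) for p in base.rglob("*") if p.is_file()
    )
    digest = hashlib.sha256()
    for rel_path, path in files:
        data = path.read_bytes()
        digest.update(rel_path.encode("utf-8") + b"\0")
        digest.update(len(data).to_bytes(8, "big"))  # length prefix avoids ambiguity
        digest.update(data)
    return digest.hexdigest()
```

Recording this fingerprint with each training run is enough to detect that two runs saw different data. Restoring an old version is the part DVC or Delta Lake/Iceberg time travel adds on top.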

Layer 2: Feature Engineering

Feature Store

The most critical (and most overlooked) component. A feature store ensures:

- Training-serving consistency: Same feature computation in offline training and online inference

- Feature reuse: Multiple models share features

- Low latency: Pre-computed features served in <10ms
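Training-serving consistency comes down to having exactly one implementation of each feature, called by both the offline and the online path. The sketch below shows that shape in plain Python; `compute_features`, `OnlineStore`, and the order-based features are hypothetical, and it does not model Feast's or Tecton's APIs.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

Features = Dict[str, float]


def compute_features(raw: dict) -> Features:
    """The one place feature logic lives (hypothetical features)."""
    totals = raw["order_totals"]
    count = len(totals)
    return {
        "order_count": float(count),
        "avg_order_value": sum(totals) / count if count else 0.0,
    }


def build_training_rows(history: Dict[str, dict]) -> List[dict]:
    """Offline path: compute features for every entity to build a training set."""
    return [{"user_id": uid, **compute_features(raw)} for uid, raw in history.items()]


@dataclass
class OnlineStore:
    """In-memory stand-in for a low-latency key-value store."""

    table: Dict[str, Features] = field(default_factory=dict)

    def materialize(self, history: Dict[str, dict]) -> None:
        # Online path: pre-compute with the SAME function used for training,
        # so serving never re-implements the feature logic.
        for uid, raw in history.items():
            self.table[uid] = compute_features(raw)

    def get(self, uid: str) -> Optional[Features]:
        # Serving is a lookup, not a computation; this is what keeps it fast.
        return self.table.get(uid)
```

Because serving only reads pre-computed values, the latency budget is dominated by the store lookup rather than by feature computation.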

We typically implement:

- Feast (open-source): Good for startups/mid-size companies

- Tecton (managed): Enterprise-grade, worth the cost for large-scale deployments

- Custom: When you need domain-specific transformations

Layer 3: Model Training & Experimentation

Compute Infrastructure

- GPU clusters: Kubernetes with NVIDIA GPU operator for distributed training

- Spot instances: Roughly 70% cost savings over on-demand pricing for interruptible workloads

- Experiment tracking: MLflow or Weights & Biases
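Spot capacity can be reclaimed at any time, so training on it is only safe if jobs checkpoint regularly and resume where they stopped. A minimal sketch of that pattern follows, assuming a JSON file as the checkpoint and a toy loop in place of real training; a real job would save model and optimizer state with its framework's own tools.

```python
import json
import os
import tempfile
from typing import Optional


def save_checkpoint(path: str, state: dict) -> None:
    # Write to a temp file in the same directory, then atomically replace the
    # old checkpoint, so a preemption mid-write cannot corrupt it.
    fd, tmp_path = tempfile.mkstemp(dir=os.path.dirname(os.path.abspath(path)))
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> dict:
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"epoch": 0, "history": []}  # fresh start


def train(path: str, total_epochs: int, stop_after: Optional[int] = None) -> dict:
    """Toy loop that resumes from the last checkpoint.

    `stop_after` simulates the spot instance being reclaimed after N epochs.
    """
    state = load_checkpoint(path)
    epochs_this_run = 0
    while state["epoch"] < total_epochs:
        if stop_after is not None and epochs_this_run >= stop_after:
            break  # simulated preemption
        state["history"].append(state["epoch"])  # stand-in for real training
        state["epoch"] += 1
        save_checkpoint(path, state)  # checkpoint after every epoch
        epochs_this_run += 1
    return state
```

Calling `train` a second time after the simulated interruption picks up at the saved epoch instead of starting over, so at most one epoch of work is lost per preemption.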

Hyperparameter Optimization

Automated search (Optuna, Ray Tune) integrated with distributed training. We typically see 10-20% accuracy improvements from proper hyperparameter tuning alone.
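Optuna and Ray Tune use smarter samplers and distribute trials across workers, but the core loop is the same: sample a configuration, evaluate it, keep the best. The sketch below uses plain random search as a simpler stand-in for those samplers. The objective is a hypothetical validation loss, and the learning rate is searched on a log10 scale, which is a common convention rather than anything the tools require.

```python
import random
from typing import Callable, Dict, Tuple

Params = Dict[str, float]


def random_search(
    objective: Callable[[Params], float],
    space: Dict[str, Tuple[float, float]],
    n_trials: int,
    seed: int = 0,
) -> Tuple[Params, float]:
    """Minimize `objective` by sampling each parameter uniformly from its range."""
    rng = random.Random(seed)  # seeded so the search itself is reproducible
    best_params: Params = {}
    best_score = float("inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in space.items()}
        score = objective(params)  # in practice: train + validate one model
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


def val_loss(p: Params) -> float:
    # Hypothetical loss surface with its optimum at lr = 1e-3, dropout = 0.2.
    return (p["lr_log10"] + 3) ** 2 + (p["dropout"] - 0.2) ** 2
```

Usage: `random_search(val_loss, {"lr_log10": (-5.0, -1.0), "dropout": (0.0, 0.5)}, n_trials=200)`. Each trial is independent, which is what makes this loop easy to spread over a cluster.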

Layer 4: Model Deployment

Serving Architecture

Three common patterns:

1. REST API (Most Common)

Model Container (TensorFlow Serving / TorchServe)

↓

API Gateway (rate limiting, auth)

↓

Load Balancer (auto-scaling)

2. Batch Inference

For non-real-time predictions (e.g., daily churn scores). Airflow orchestrates the batch jobs, which run on Spark.
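Spark spreads a batch job across a cluster and Airflow schedules it, but each worker follows the same core pattern: read rows in chunks, call the model on a whole chunk, and write out (id, score) pairs. A single-process sketch of that pattern, where `predict`, `customer_id`, and `features` are hypothetical names:

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List, Sequence, Tuple

Predict = Callable[[List[Sequence[float]]], List[float]]


def batched(items: Iterable, size: int) -> Iterator[list]:
    """Yield successive lists of at most `size` items."""
    it = iter(items)
    while chunk := list(islice(it, size)):
        yield chunk


def score_in_batches(
    rows: Iterable[dict], predict: Predict, batch_size: int = 1000
) -> Iterator[Tuple[str, float]]:
    """Score rows a batch at a time, yielding (customer_id, score) pairs.

    Streaming keeps memory bounded however many customers there are, and
    calling `predict` once per batch amortizes per-call model overhead.
    """
    for chunk in batched(rows, batch_size):
        scores = predict([row["features"] for row in chunk])
        yield from zip((row["customer_id"] for row in chunk), scores)
```

With 2,500 rows and `batch_size=1000`, the model is invoked three times (1,000, 1,000, and 500 rows).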

3. Edge Deployment

For latency-critical applications. We use ONNX Runtime or TensorFlow Lite for on-device inference.

CI/CD Pipeline

- Data scientist commits model code to Git

- CI runs validation tests (data checks, model performance benchmarks)

- Model is containerized and pushed to registry

- CD deploys to staging → canary (5% traffic) → full production

- Automatic rollback if error rates spike
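The canary and rollback steps reduce to two small decisions: which requests go to the new model, and whether its error rate is bad enough to revert. A minimal sketch of both follows. Hash-based bucketing is one common way to split traffic, and the thresholds in `should_rollback` (2x the baseline rate, a 1% floor, at least 500 requests) are illustrative assumptions, not values from any particular deployment tool.

```python
import hashlib


def routes_to_canary(request_key: str, canary_percent: float = 5.0) -> bool:
    """Send roughly `canary_percent` of traffic to the canary, deterministically.

    Hashing a stable key (e.g. a user ID) instead of sampling randomly keeps
    each user on the same model version across requests.
    """
    bucket = int(hashlib.sha256(request_key.encode()).hexdigest(), 16) % 10_000
    return bucket < canary_percent * 100


def should_rollback(
    canary_errors: int,
    canary_requests: int,
    baseline_errors: int,
    baseline_requests: int,
    max_ratio: float = 2.0,
    min_error_rate: float = 0.01,
    min_requests: int = 500,
) -> bool:
    """Roll back if the canary's error rate clearly exceeds the baseline's."""
    if canary_requests < min_requests:
        return False  # too little traffic to judge yet
    canary_rate = canary_errors / canary_requests
    baseline_rate = baseline_errors / max(baseline_requests, 1)
    # "Spike" = above both a relative threshold and an absolute floor.
    return canary_rate > max(max_ratio * baseline_rate, min_error_rate)
```

Requiring both a relative and an absolute threshold avoids rolling back on noise when the baseline error rate is near zero.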

Layer 5: Monitoring & Observability

What We Monitor

- System metrics: Latency (p50, p99), throughput, error rates

- Data drift: Input distribution shifts (KL divergence, PSI)

- Prediction drift: Output distribution changes

- Model performance: Accuracy, precision, recall (requires ground truth labels)

- Business metrics: Revenue impact, user engagement
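The Population Stability Index compares how a feature's values fall into bins in a baseline sample (shares e_i) versus a current sample (shares a_i): PSI = Σ_i (a_i − e_i) · ln(a_i / e_i). A minimal sketch follows, assuming bins cut at the baseline's deciles and a small epsilon to keep empty bins from producing log(0); both are common conventions rather than fixed parts of the definition.

```python
import bisect
import math
from typing import List, Sequence


def _bin_shares(values: Sequence[float], edges: List[float]) -> List[float]:
    """Fraction of `values` falling into each bin defined by sorted `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(
    expected: Sequence[float],
    actual: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Population Stability Index of `actual` against the `expected` baseline."""
    ordered = sorted(expected)
    # Interior cut points at the baseline's quantiles, so each bin holds
    # about 1/n_bins of the baseline sample.
    edges = [ordered[len(ordered) * k // n_bins] for k in range(1, n_bins)]
    e = _bin_shares(expected, edges)
    a = _bin_shares(actual, edges)
    # Each term is >= 0: (a - e) and log(a / e) always share a sign.
    return sum((ai - ei) * math.log((ai + eps) / (ei + eps)) for ai, ei in zip(a, e))
```

Two samples from the same distribution score close to 0, while a one-standard-deviation shift in the mean lands well above the 0.2 drift threshold.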

Alerting Rules

We set up alerts for:

- Latency spikes (p99 > 200ms)

- Error rate increase (>1% of requests)

- Data drift detected (PSI > 0.2)

- Model accuracy drop (>5% below baseline)
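The four alert rules translate directly into threshold checks over a monitoring window. The sketch below encodes them as listed, with two assumptions: "5% below baseline" is read as a relative drop (accuracy under 95% of baseline), and p99 uses the nearest-rank method. `WindowStats` and `fired_alerts` are hypothetical names; in production these rules usually live in the alerting system's own configuration.

```python
import math
from dataclasses import dataclass
from typing import List


def percentile(values: List[float], q: float) -> float:
    """Nearest-rank percentile for q in (0, 100]; `values` must be non-empty."""
    ordered = sorted(values)
    rank = math.ceil(q * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


@dataclass
class WindowStats:
    latencies_ms: List[float]
    errors: int
    requests: int
    psi: float
    accuracy: float
    baseline_accuracy: float


def fired_alerts(s: WindowStats) -> List[str]:
    alerts = []
    if s.latencies_ms and percentile(s.latencies_ms, 99) > 200:
        alerts.append("latency_p99")
    if s.requests and s.errors / s.requests > 0.01:
        alerts.append("error_rate")
    if s.psi > 0.2:
        alerts.append("data_drift")
    # Assumption: relative 5% drop, not 5 percentage points.
    if s.accuracy < s.baseline_accuracy * 0.95:
        alerts.append("accuracy_drop")
    return alerts
```

Note that the accuracy rule can only be evaluated once ground-truth labels for the window have arrived, so it typically runs on a delay relative to the other three.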

Layer 6: Governance & Security

Model Lineage

Track every model back to:

- Training dataset version

- Code commit (Git SHA)

- Hyperparameters

- Training metrics
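A lineage record only needs the four items above, stored so that identical inputs always serialize identically. The sketch below is a hypothetical record type, not MLflow's or any registry's API; the dataset version could be a DVC hash or a Delta Lake table version.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Dict


@dataclass(frozen=True)
class ModelLineage:
    model_name: str
    dataset_version: str  # e.g. a data content hash or table version
    git_sha: str
    hyperparameters: Dict[str, Any] = field(default_factory=dict)
    metrics: Dict[str, float] = field(default_factory=dict)

    def to_json(self) -> str:
        # sort_keys makes the serialization stable regardless of dict
        # insertion order, so equal lineage always yields equal bytes.
        return json.dumps(asdict(self), sort_keys=True)

    def lineage_id(self) -> str:
        # Short content-derived ID, handy for tagging the model artifact.
        return hashlib.sha256(self.to_json().encode()).hexdigest()[:12]
```

Tagging the deployed artifact with `lineage_id()` lets anyone walk back from a running model to the exact data, code, and configuration that produced it.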

Security Controls

- Data encryption (at rest and in transit)

- Access controls (RBAC for model deployment)

- Audit logs (who deployed what model when)

- Model explainability (SHAP, LIME) for regulatory compliance
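RBAC for deployment and audit logging fit naturally into one gate: check the caller's roles, then record the attempt whether or not it was allowed. A minimal sketch, assuming a hypothetical role-to-permission mapping and an in-memory log; a real system would persist entries to durable, tamper-evident storage.

```python
import time
from typing import Dict, List, Set

# Hypothetical role -> permission mapping.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "data_scientist": {"register_model"},
    "ml_engineer": {"register_model", "deploy_staging"},
    "release_manager": {"deploy_staging", "deploy_production"},
}


class DeploymentGate:
    """Checks RBAC before a deployment and records every attempt."""

    def __init__(self, user_roles: Dict[str, Set[str]]):
        self.user_roles = user_roles
        self.audit_log: List[dict] = []  # append-only in this sketch

    def deploy(self, user: str, model_id: str, target: str) -> bool:
        action = f"deploy_{target}"
        allowed = any(
            action in ROLE_PERMISSIONS.get(role, set())
            for role in self.user_roles.get(user, set())
        )
        # Log denials too: who tried to deploy what model, where, and when.
        self.audit_log.append(
            {"ts": time.time(), "user": user, "model": model_id,
             "target": target, "allowed": allowed}
        )
        return allowed
```

Logging denied attempts alongside successful ones is what makes the audit trail useful for investigating misuse, not just for reconstructing history.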

Cost Optimization

ML infrastructure can get expensive fast. We optimize:

- Training: Spot instances, early stopping, gradient accumulation

- Inference: Model quantization (INT8), batching, caching

- Storage: Data lifecycle policies (archive old training data)
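INT8 quantization stores each weight as a one-byte integer plus a shared scale factor, roughly a 4x size reduction versus float32. The sketch below shows symmetric per-tensor quantization of a weight list, the simplest variant; production toolkits (for example in PyTorch or ONNX Runtime) also quantize activations and calibrate scales on representative data.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: w ≈ q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest-magnitude weight to ±127; guard against all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [v * scale for v in q]
```

Rounding to the nearest integer bounds the per-weight reconstruction error by `scale / 2`, which is why a single outlier weight (large `max_abs`) hurts precision for every other weight in the tensor.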

The Full System

When all layers work together:

- Data scientists iterate fast (experiment tracking, automated training)

- Models deploy reliably (CI/CD, containerization)

- Operations stay ahead of issues (monitoring, alerting)

- Business trusts the system (governance, explainability)

That's production-grade ML. It's not about one model—it's about building a platform that enables hundreds of models to succeed.

Sean Li

Founder & Principal Consultant at Duoduo Tech. Specializes in production-grade AI infrastructure, causal inference, and domain-specific ML applications across Life Sciences, Finance, and Media.